Understanding Rate Limiting: A Comprehensive Guide To Managing API Traffic

Rate limiting is the practice of controlling how many requests a user or system can make to an API within a specific time frame. Whether you are a developer building applications or a business owner managing APIs, understanding it is essential for keeping services stable and protecting them from abuse. This article explains why rate limiting matters, how it works, how to implement it, and which practices to follow.

APIs are the backbone of countless applications and services. Without proper management, however, they can be overwhelmed by excessive traffic, leading to slow responses or complete downtime. Rate limiting acts as a safeguard that keeps APIs accessible and responsive for all users. It is particularly critical for businesses that rely on APIs to deliver services to customers, where any disruption can have significant financial and reputational consequences.

This guide covers how rate limiting works, why it matters, and how to implement it effectively. By the end, you should be able to manage API traffic efficiently and protect your systems from potential threats.


Table of Contents

- What is Rate Limiting?

- The Importance of Rate Limiting

- How Rate Limiting Works

- Types of Rate Limiting

- Implementing Rate Limiting

- Best Practices for Rate Limiting

- Common Mistakes in Rate Limiting

- Tools and Technologies for Rate Limiting

- Real-World Examples of Rate Limiting

- Conclusion

What is Rate Limiting?

Rate limiting is a technique used to control the number of requests a user or system can make to an API within a given time period. It is commonly employed to prevent abuse, ensure fair usage, and maintain the stability of the system. By setting limits on the number of requests, rate limiting helps prevent overloading and ensures that all users have access to the service.

For example, a weather API might allow 1,000 requests per day for free users and 10,000 requests per day for paid users. If a user exceeds their allocated limit, they may receive an error message or be temporarily blocked from making further requests. This approach helps maintain the integrity of the API and ensures that it remains available for all users.
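The tiered quota in this example can be sketched as a per-user counter keyed by calendar day. This is a minimal in-memory illustration, assuming days roll over at midnight UTC; the class name `DailyQuota` and the tier table are hypothetical, not any particular API's implementation.

```python
from collections import defaultdict
from datetime import datetime, timezone
from typing import Optional

# Hypothetical tier table mirroring the example above: free vs. paid daily quotas.
DAILY_LIMITS = {"free": 1_000, "paid": 10_000}


class DailyQuota:
    """Per-user daily request quota; each counter is keyed by UTC calendar day."""

    def __init__(self, limits: dict[str, int]):
        self.limits = limits
        # (user_id, "YYYY-MM-DD") -> requests so far. Old days are never purged
        # in this sketch; a real store would expire them.
        self.counts = defaultdict(int)

    def allow(self, user_id: str, tier: str, now: Optional[datetime] = None) -> bool:
        now = now or datetime.now(timezone.utc)  # `now` is expected to be in UTC
        key = (user_id, now.strftime("%Y-%m-%d"))
        if self.counts[key] >= self.limits[tier]:
            return False  # over quota: the API would return an error or block the caller
        self.counts[key] += 1
        return True
```

A new day produces a new key, so every user's count effectively resets at midnight UTC.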

Why Rate Limiting Matters

Rate limiting is particularly important in scenarios where APIs are exposed to the public. Without rate limiting, malicious actors could exploit the system by sending an overwhelming number of requests, leading to denial-of-service (DoS) attacks. Additionally, legitimate users could inadvertently overload the system by making too many requests in a short period, causing performance degradation for everyone.

The Importance of Rate Limiting

Rate limiting plays a critical role in maintaining the health and security of APIs. Below are some key reasons why rate limiting is essential:

- Preventing Abuse: Rate limiting helps protect APIs from malicious actors who may attempt to exploit vulnerabilities or disrupt services.

- Ensuring Fair Usage: By setting limits, rate limiting ensures that all users have equal access to the API, preventing any single user from monopolizing resources.

- Improving Performance: Limiting the number of requests reduces the load on servers, ensuring that the API remains responsive and reliable.

- Cost Management: Every request consumes compute, bandwidth, and sometimes paid third-party services, so capping request volume keeps infrastructure costs predictable.

Impact on User Experience

Rate limiting directly impacts the user experience. When implemented correctly, it ensures that APIs remain accessible and functional for all users. However, if rate limiting is too restrictive, it can frustrate users and lead to negative feedback. Striking the right balance is crucial for maintaining user satisfaction while protecting the system.

How Rate Limiting Works

Rate limiting operates by tracking the number of requests made by a user or system within a specific time window. When the limit is reached, additional requests are either denied or delayed until the next time window begins. There are several common algorithms used to implement rate limiting, including:


- Token Bucket: A bucket holds up to a fixed number of tokens and is refilled at a steady rate. Each request spends a token, and requests are rejected when the bucket is empty, so the bucket size permits short bursts while the refill rate caps the long-term average.

- Leaky Bucket: In this model, requests are added to a queue and processed at a fixed rate. If the queue becomes full, additional requests are dropped.

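- Fixed Window: Requests are counted within fixed time intervals (for example, each clock minute), and the count resets at the start of each interval. This is simple and cheap, but a client can send up to twice the limit in a short span that straddles an interval boundary.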

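- Sliding Window: Instead of resetting at fixed boundaries, the limit is evaluated over a window that moves with time, either by keeping a log of recent request timestamps or by weighting the counts of the current and previous fixed intervals. This smooths out the boundary bursts that fixed windows allow.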
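The two bucket algorithms above can be sketched in a few lines each. This is an illustrative, single-client, in-memory version; the class and method names are hypothetical, and the injectable `clock` parameter exists only so the timing can be tested. The leaky bucket follows the queue-based description in the list: requests wait in a bounded queue and a worker drains them at a fixed rate.

```python
import time
from collections import deque


class TokenBucket:
    """Up to `capacity` tokens, refilled continuously at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity  # start full, so an initial burst is allowed
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to the time elapsed, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class LeakyBucket:
    """Queue of at most `capacity` requests, released at `rate` requests per second."""

    def __init__(self, capacity: int, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.queue = deque()
        self.last_leak = clock()

    def submit(self, request) -> bool:
        """Enqueue a request; return False (request dropped) if the queue is full."""
        if len(self.queue) >= self.capacity:
            return False
        if not self.queue:
            self.last_leak = self.clock()  # idle time does not earn a later burst
        self.queue.append(request)
        return True

    def drain(self) -> list:
        """Called by a worker: return the requests due for processing right now."""
        due = int((self.clock() - self.last_leak) * self.rate)
        if due == 0:
            return []
        self.last_leak += due / self.rate
        return [self.queue.popleft() for _ in range(min(due, len(self.queue)))]
```

The token bucket admits or rejects each request immediately, while the leaky bucket smooths output by delaying work. A real service would keep one bucket per client key.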

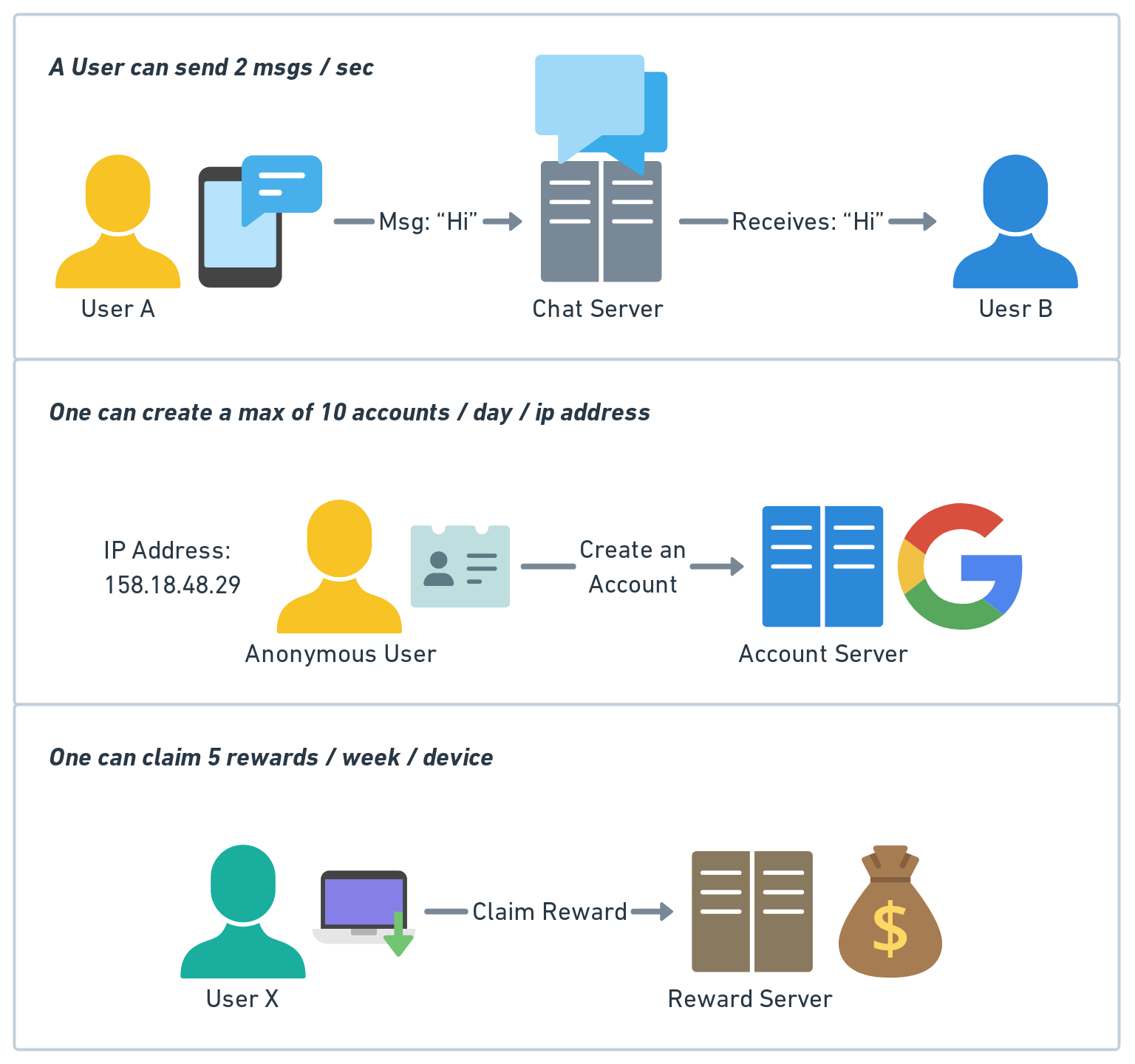

Example of Rate Limiting in Action

Consider a social media platform that allows users to post updates via an API. The platform might implement rate limiting to prevent spam by restricting users to 50 posts per hour. If a user attempts to post more than 50 times within an hour, the API will return an error message indicating that the rate limit has been exceeded.
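Because the rule is "50 posts within an hour", one way to enforce it exactly is a sliding window log: keep each user's recent post timestamps and count those inside the trailing hour. This is a hypothetical in-memory sketch, not the platform's actual mechanism; it stores up to 50 timestamps per user.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLog:
    """Allows at most `limit` events per user in any trailing `window_s` seconds."""

    def __init__(self, limit: int, window_s: float, clock=time.monotonic):
        self.limit = limit
        self.window_s = window_s
        self.clock = clock
        self.log = defaultdict(deque)  # user_id -> timestamps of accepted events

    def allow(self, user_id: str) -> bool:
        now = self.clock()
        events = self.log[user_id]
        # Discard timestamps that have slid out of the trailing window.
        while events and events[0] <= now - self.window_s:
            events.popleft()
        if len(events) >= self.limit:
            return False  # the API would respond with a "rate limit exceeded" error
        events.append(now)
        return True


post_limiter = SlidingWindowLog(limit=50, window_s=3600)
```

Rejected attempts are not logged, so a user who keeps retrying is not pushed further into the future.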

Types of Rate Limiting

Rate limiting can be implemented in various ways, depending on the specific needs of the system. Below are some common types of rate limiting:

1. User-Based Rate Limiting

User-based rate limiting restricts the number of requests based on individual user accounts. This approach is commonly used in APIs that require authentication, such as social media platforms or cloud services.

2. IP-Based Rate Limiting

IP-based rate limiting limits the number of requests from a single IP address. While effective for preventing abuse, this method can be problematic for users behind shared networks, such as corporate environments or public Wi-Fi.

3. Global Rate Limiting

Global rate limiting applies a single limit to the combined traffic from all users or systems. This approach is useful for protecting critical infrastructure or managing high-traffic scenarios.

4. Endpoint-Specific Rate Limiting

Endpoint-specific rate limiting sets different limits for different API endpoints. For example, a read-only endpoint might have a higher limit than a write endpoint due to the differing levels of resource consumption.
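The four types above differ mainly in what the counter is keyed on, and they can be combined. The sketch below, with a hypothetical `Request` shape and made-up limits, derives the keys a single request must satisfy: a per-endpoint limit keyed by user (or by IP when anonymous), plus one global key. Each pair would then be checked by a counter such as a token bucket or fixed window.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Request:
    """Hypothetical minimal request shape, used only for this illustration."""
    method: str
    path: str
    client_ip: str
    user_id: Optional[str] = None


# Hypothetical limits in requests per minute: writes cost more than reads.
ENDPOINT_LIMITS = {("GET", "/posts"): 600, ("POST", "/posts"): 60}
DEFAULT_LIMIT = 120
GLOBAL_LIMIT = 10_000


def limit_keys(req: Request) -> list[tuple[str, int]]:
    """Return the (counter key, limit) pairs a request must satisfy."""
    limit = ENDPOINT_LIMITS.get((req.method, req.path), DEFAULT_LIMIT)
    # User-based when authenticated; IP-based fallback for anonymous traffic.
    who = f"user:{req.user_id}" if req.user_id else f"ip:{req.client_ip}"
    return [
        (f"{who}:{req.method} {req.path}", limit),  # endpoint-specific, per client
        ("global", GLOBAL_LIMIT),                    # one cap shared by all clients
    ]
```

Keying on the user ID when one is available avoids penalizing everyone behind a shared IP address.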

Implementing Rate Limiting

Implementing rate limiting requires careful planning and consideration of various factors, including the type of API, expected traffic patterns, and user requirements. Below are some steps to guide you through the process:

Step 1: Define Your Limits

Start by determining the appropriate limits for your API. Consider factors such as the expected number of users, the nature of the requests, and the resources required to process them. For example, when many active clients share the same backend capacity, each one typically needs a lower limit than it would on a lightly used API.
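One rough way to turn these factors into a number is to divide measured backend capacity among the clients expected to be active at once, keeping a safety margin. This is a back-of-envelope heuristic, not a standard formula; it assumes load is spread evenly across clients, and the function name and default headroom are hypothetical.

```python
import math


def per_client_limit(capacity_rps: float, active_clients: int,
                     window_s: int, headroom: float = 0.8) -> int:
    """Back-of-envelope per-client limit for a window of `window_s` seconds.

    capacity_rps:   sustained requests/second the backend can serve (e.g. from load tests)
    active_clients: clients expected to be active at the same time
    headroom:       share of capacity handed out to clients; the rest is a safety margin
    """
    if active_clients <= 0:
        raise ValueError("active_clients must be positive")
    per_client_rps = capacity_rps * headroom / active_clients
    return math.floor(per_client_rps * window_s)
```

With hypothetical figures of 2,000 requests per second, 500 concurrently active clients, and 80% headroom, each client gets 3.2 requests per second, or 11,520 requests per hour. Real traffic is uneven, so treat the result as a starting point to refine through monitoring.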

Step 2: Choose an Algorithm

Select the rate-limiting algorithm that best suits your needs. The token bucket and sliding window algorithms are popular choices due to their flexibility and accuracy.
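A fixed window can admit up to twice its limit across a window boundary. A common middle ground is the sliding window counter, which keeps only two counts and weights the previous window by how much of it still overlaps the trailing window. The sketch below is a single-client illustration under the assumption that the previous window's requests were spread evenly; the class name is hypothetical.

```python
import time


class SlidingWindowCounter:
    """Approximate sliding window built from two fixed-window counters (one client)."""

    def __init__(self, limit: int, window_s: float, clock=time.monotonic):
        self.limit = limit
        self.window_s = window_s
        self.clock = clock
        self.index = int(clock() // window_s)  # which fixed window we are in
        self.curr = 0  # requests counted in the current fixed window
        self.prev = 0  # requests counted in the immediately preceding window

    def allow(self) -> bool:
        now = self.clock()
        index = int(now // self.window_s)
        if index != self.index:
            # Roll forward; the old count carries over only if the windows are adjacent.
            self.prev = self.curr if index == self.index + 1 else 0
            self.curr = 0
            self.index = index
        elapsed = (now - index * self.window_s) / self.window_s  # 0.0 .. 1.0
        # Assume the previous window's requests were spread evenly across it.
        estimate = self.prev * (1.0 - elapsed) + self.curr
        if estimate >= self.limit:
            return False
        self.curr += 1
        return True
```

Halfway through a window, for instance, half of the previous window's count still counts against the client, so a burst at the end of one window cannot be followed by a full burst at the start of the next.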

Step 3: Implement Rate Limiting

Rate limiting can be implemented at various levels, including the application layer, API gateway, or load balancer. Tools such as NGINX, AWS API Gateway, and Cloudflare offer built-in rate-limiting capabilities.
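At the application layer, a limiter can wrap the whole app as middleware. The sketch below uses WSGI, Python's standard web-server interface (PEP 3333), and a simple per-address fixed window; the class name is hypothetical. Because `REMOTE_ADDR` is the direct peer, an app behind a proxy or load balancer would see the proxy's address, which is one reason gateways and load balancers are often the better place to enforce IP-based limits.

```python
import math
import time


class RateLimitMiddleware:
    """WSGI middleware that answers over-limit clients with HTTP 429.

    Fixed window per client address, kept in process memory: each worker
    process enforces its own limit, and old windows are never purged here.
    """

    def __init__(self, app, limit: int, window_s: int, clock=time.time):
        self.app = app
        self.limit = limit
        self.window_s = window_s
        self.clock = clock
        self.counts = {}  # (client address, window number) -> request count

    def __call__(self, environ, start_response):
        now = self.clock()
        window = int(now // self.window_s)
        # REMOTE_ADDR is the direct peer; behind a proxy it is the proxy's address.
        key = (environ.get("REMOTE_ADDR", "unknown"), window)
        self.counts[key] = self.counts.get(key, 0) + 1
        if self.counts[key] > self.limit:
            retry_after = math.ceil((window + 1) * self.window_s - now)
            start_response("429 Too Many Requests", [
                ("Content-Type", "text/plain"),
                ("Retry-After", str(retry_after)),
            ])
            return [b"Rate limit exceeded\n"]
        return self.app(environ, start_response)
```

Wrapping an existing WSGI application is a one-liner, for example `app = RateLimitMiddleware(app, limit=100, window_s=60)`.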

Step 4: Monitor and Adjust

Once rate limiting is in place, monitor its effectiveness and adjust the limits as needed. Analyze traffic patterns and user feedback to ensure that the limits are appropriate and not causing unnecessary restrictions.
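Monitoring starts with recording every limiter decision. The hypothetical helper below tallies allowed and rejected requests per key; a high rejection rate across many legitimate clients may suggest the limit is too low, while a single key with many rejections may point to abuse or a misbehaving integration. In production these numbers would usually be exported to a metrics system rather than kept in memory.

```python
from collections import Counter


class LimiterMetrics:
    """Tallies allowed vs. rejected decisions per key so limits can be tuned."""

    def __init__(self):
        self.allowed = Counter()
        self.rejected = Counter()

    def record(self, key: str, allowed: bool) -> None:
        if allowed:
            self.allowed[key] += 1
        else:
            self.rejected[key] += 1

    def rejection_rate(self, key: str) -> float:
        total = self.allowed[key] + self.rejected[key]
        return self.rejected[key] / total if total else 0.0

    def most_throttled(self, n: int = 5) -> list[tuple[str, int]]:
        """Keys with the most rejections: candidates for a closer look."""
        return self.rejected.most_common(n)
```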

Best Practices for Rate Limiting

To ensure that your rate-limiting strategy is effective and user-friendly, follow these best practices:

- Communicate Limits Clearly: Provide users with clear documentation on rate limits and how they are enforced.

- Fail Gracefully: When a user exceeds their limit, return an HTTP 429 Too Many Requests response with an informative error message and, where possible, a Retry-After header telling the client when to try again.

- Monitor Usage Patterns: Regularly analyze usage data to identify potential issues and optimize limits.

- Offer Tiered Limits: Provide different limits for free and paid users to encourage upgrades and accommodate varying needs.
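Clear communication and graceful failure come together in the response itself. The sketch below builds quota headers for every response and a 429 response for over-limit clients. `Retry-After` is a standard HTTP header; the `X-RateLimit-*` names are a widely used convention (GitHub uses similar ones) rather than a standard, and the function names, JSON fields, and documentation URL are hypothetical.

```python
import json
import math


def rate_limit_headers(limit: int, remaining: int, reset_epoch: float) -> dict[str, str]:
    """Quota headers for every response. X-RateLimit-* is a common convention, not a standard."""
    return {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(max(0, remaining)),
        "X-RateLimit-Reset": str(int(reset_epoch)),  # Unix time when the window resets
    }


def too_many_requests(limit: int, reset_epoch: float, now: float,
                      docs_url: str) -> tuple[int, dict[str, str], bytes]:
    """Build an informative 429 response as (status, headers, body)."""
    retry_after = max(0, math.ceil(reset_epoch - now))
    headers = rate_limit_headers(limit, 0, reset_epoch)
    headers["Retry-After"] = str(retry_after)  # standard HTTP header, in seconds
    headers["Content-Type"] = "application/json"
    body = json.dumps({
        "error": "rate_limit_exceeded",
        "message": f"Limit of {limit} requests reached; retry in {retry_after} seconds.",
        "documentation_url": docs_url,  # hypothetical link to your rate-limit docs
    }).encode("utf-8")
    return 429, headers, body
```

Sending the quota headers on successful responses too lets well-behaved clients slow down before they ever hit the limit.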

Common Mistakes in Rate Limiting

While rate limiting is a powerful tool, it can be counterproductive if not implemented correctly. Below are some common mistakes to avoid:

1. Setting Limits Too Low

Setting limits that are too restrictive can frustrate users and lead to negative feedback. Ensure that your limits are realistic and aligned with user expectations.

2. Ignoring Edge Cases

Failing to account for edge cases, such as shared IP addresses or burst traffic, can result in unintended restrictions. Test your rate-limiting strategy thoroughly to identify and address potential issues.

3. Lack of Transparency

Users should be aware of rate limits and how they are enforced. Failing to communicate this information can lead to confusion and dissatisfaction.

Tools and Technologies for Rate Limiting

Several tools and technologies can help you implement rate limiting effectively. Below are some popular options:

- NGINX: A widely used web server and reverse proxy that offers built-in rate-limiting capabilities.

- AWS API Gateway: A managed service that provides rate-limiting features for APIs hosted on AWS.

- Cloudflare: A content delivery network (CDN) that includes rate-limiting functionality as part of its security features.

- Redis: An in-memory data store that can be used to implement custom rate-limiting solutions.
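A common custom pattern with Redis is a fixed-window counter built from the `INCR` and `EXPIRE` commands, which lets every application server share the same counts. The sketch assumes the redis-py client (`redis.Redis()`); the function name and key scheme are hypothetical. The pipeline is transactional by default in redis-py, so both commands run together.

```python
import time


def allow_request(r, client_id: str, limit: int, window_s: int) -> bool:
    """Fixed-window counter kept in Redis, shared by every app server.

    `r` is assumed to be a redis-py client such as `redis.Redis()`.
    """
    window = int(time.time()) // window_s
    key = f"ratelimit:{client_id}:{window}"  # hypothetical key naming scheme
    pipe = r.pipeline()  # transactional by default: INCR and EXPIRE run atomically
    pipe.incr(key)
    pipe.expire(key, window_s)  # the key cleans itself up once its window has passed
    count, _ = pipe.execute()
    return count <= limit
```

Because the window number is part of the key, each window starts from zero without any explicit reset.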

Real-World Examples of Rate Limiting

Many popular services use rate limiting to manage API traffic and protect their systems. Below are some examples:

1. Twitter API

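Twitter (now X) enforces rate limits on its API to prevent abuse and ensure fair usage. Its access tiers combine per-endpoint request limits, historically measured in 15-minute windows, with monthly caps; for example, the v2 API's former Essential tier capped retrieval at 500,000 tweets per month, while enterprise customers had higher limits based on their subscription plans. The tiers and caps have changed several times, so check the current developer documentation before relying on specific numbers.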

2. Google Maps API

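Google Maps Platform uses quotas and pay-as-you-go billing to manage usage and control costs. Historically, a $200 monthly credit covered roughly 28,000 dynamic map loads at no charge, with additional usage billed per request. Google has since restructured its pricing, so check the current pricing page for exact free allowances.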

3. GitHub API

GitHub enforces rate limits on its REST API to protect its infrastructure and ensure reliable service. Authenticated users are allowed 5,000 requests per hour, while unauthenticated requests are limited to 60 per hour per originating IP address.
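GitHub reports each client's standing in response headers such as `x-ratelimit-remaining` and `x-ratelimit-reset` (a Unix timestamp), and may send `retry-after` (in seconds) for its secondary limits. A client can use them to back off politely. The sketch below is a hypothetical helper that takes a plain dictionary of response headers; it is not part of any GitHub SDK.

```python
import time
from typing import Mapping, Optional


def seconds_until_allowed(headers: Mapping[str, str], now: Optional[float] = None) -> float:
    """How long to wait before the next GitHub API call (0.0 if quota remains)."""
    h = {k.lower(): v for k, v in headers.items()}  # header names are case-insensitive
    if "retry-after" in h:
        return float(h["retry-after"])  # secondary limits: wait this many seconds
    if int(h.get("x-ratelimit-remaining", "1")) > 0:
        return 0.0
    reset_epoch = float(h.get("x-ratelimit-reset", "0"))  # when the hourly quota resets
    now = time.time() if now is None else now
    return max(0.0, reset_epoch - now)
```

A client would sleep for the returned number of seconds before retrying, rather than hammering the API and collecting more errors.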

Conclusion

Rate limiting is an essential practice for managing API traffic and ensuring the stability, security, and fairness of your systems. By understanding the principles of rate limiting and implementing it effectively, you can protect your APIs from abuse, improve performance, and enhance the user experience.

As you implement rate limiting, follow best practices, choose the right tools, and continuously monitor and adjust your strategy. Whether you are a developer, business owner, or IT professional, a solid grasp of rate limiting will help you build robust and reliable systems.

